A Facebook Mandate for the Fourth Generation of Computing

Facebook’s Mark Zuckerberg has been vocal in his belief that augmented reality (AR) glasses will eventually replace the smartphone as the primary personal computing device. He also invested $2b of Facebook’s capital to buy Oculus as part of that transition. The smartphone market is a $500b annual market that Facebook doesn’t play in, so $2b is peanuts for a company with a $750b market cap. But VR/AR has been slow to grow, held back by technical challenges, by the difficulty of changing the user-interface paradigm, and by the need to put up with an awkward device on the face.

Facebook sees AR as a chance to control an entire hardware-software experience, something it has tried but not yet achieved, having missed the mobile wave. When iPhones and Android phones came into being, Facebook was in no position to produce a smartphone of its own. Today, its apps are wildly popular but dependent on software made by Apple and Google. AR glasses could give the company an opportunity to own the whole system and gain the financial and performance benefits that come with it. The company has more than 2 billion users worldwide, plenty of cash from advertising, and a growing number of people capable of creating its own AR glasses and the virtual experiences they’ll eventually deliver.

Facebook is open about how it is building its version of this futuristic device and spoke at its Connect conference last week about the development of its AR glasses. Michael Abrash, the chief scientist behind the project and a veteran of the personal computing era, spent much of his career developing games, including the first-person shooter Quake for id Software in the 1990s. Getting Facebook’s AR glasses off the ground, along with an ecosystem of social AR experiences for Facebook users, is the biggest challenge of his career. Abrash says that Facebook’s AR glasses are still years off and need breakthroughs in several areas: the displays for the glasses, the mapping system that creates a common augmented world people can share, novel ways of controlling the device, deep artificial intelligence (AI) models that make sense of what the glasses see and hear, tiny processors powerful enough to run it all, and flexible batteries with enough charge to run for 18+ hours. And all that must somehow fit into a pair of glasses svelte and comfortable enough that people will wear them all day.

Facebook also needs to create a trustworthy environment, so that people will permit it to store the extremely personal data that AR glasses will collect and will trust it to protect the privacy of that data. Facebook will have to beat Apple at its own game, and it starts at a disadvantage: given the data-capturing capabilities of AR glasses, Apple’s privacy record makes it the more trustworthy of the two.

When full-fledged AR glasses arrive, they’ll need a very different graphical user interface. The 3D user interface of AR glasses will seem larger and more immersive than the 2D screen and manual control paradigm in everything from the first personal computer to the latest smartphone. With the displays of AR glasses close to the eyes, the user interface must be built around and within the entire visible world.

“All of a sudden, rather than being in a controlled environment with a controlled input, you’re in every environment . . . in your entire life,” Abrash says.

Figure 1: Facebook Goggle Components

Because of that immersion, and the idea that the user interface of personal technology will move around the wearer in the real world, Facebook believes wearers of AR glasses will need interfaces for hand gestures (detected by hand-tracking cameras on the glasses) and voice commands (picked up by a microphone array in the device). But, Abrash points out, those ways of controlling the technology may be awkward in social situations. For example, making hand gestures or using voice commands probably won’t work well when talking to someone on the street. The other person might think you’re crazy or, if they’re aware of the glasses, grow nervous that you may be pulling up information about them. A more discreet way of controlling the glasses will be required.

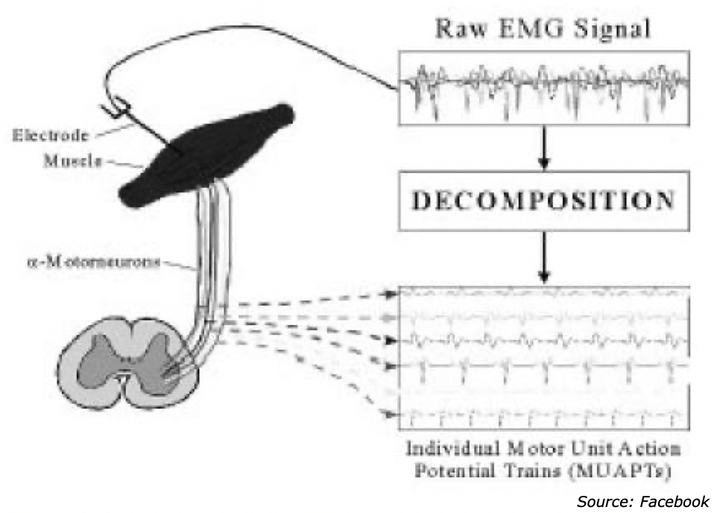

“The eye-tracking technology in the glasses’ cameras might be more effective in such situations. You may be able to choose from items in a menu projected inside the glasses by resting your eyes on the thing you want. But even that might be noticed by a person standing in front of you. Making small movements with your fingers might be even more discreet,” Abrash says, referring to a new input method called electromyography, or EMG, which uses the electrical signals that the nervous system sends to the muscles to control the functions of a device.

EMG signals can be used for clinical and biomedical applications, Evolvable Hardware Chip (EHW) development, and modern human-computer interaction. EMG signals acquired from muscles require advanced methods for detection, decomposition, processing, and classification. A variety of methodologies and algorithms exist for analyzing the signal and understanding its nature, and hardware implementations using EMG have focused on prosthetic hand control, grasp recognition, and human-computer interaction.

A biomedical signal is a collective electrical signal acquired from any organ that represents a physical variable of interest. Such a signal is normally a function of time and can be described in terms of its amplitude, frequency, and phase. The EMG signal is a biomedical signal that measures the electrical currents generated in muscles during contraction, which represent neuromuscular activity. The nervous system always controls muscle activity (contraction and relaxation). Hence, the EMG signal is a complicated signal that is controlled by the nervous system and depends on the anatomical and physiological properties of muscles. The signal acquires noise while traveling through different tissues. Moreover, an EMG detector, particularly one at the surface of the skin, collects signals from several motor units at once, and those signals may interact.

Detection of EMG signals with powerful and advanced methodologies is becoming a very important requirement in biomedical engineering. The main reason for the interest in EMG signal analysis is clinical diagnosis and biomedical applications. The management and rehabilitation of motor disability is one of the important application areas. The shapes and firing rates of Motor Unit Action Potentials (MUAPs) in EMG signals provide an important source of information for the diagnosis of neuromuscular disorders. Once appropriate algorithms and methods for EMG signal analysis are readily available, the nature and characteristics of the signal can be properly understood, and hardware implementations can be made for various EMG-related applications.

There has been recent progress in developing better algorithms, upgrading existing methodologies, improving detection techniques to reduce noise, and acquiring accurate EMG signals. A few hardware implementations have been built for prosthetic hand control, grasp recognition, and human-machine interaction. It is important to investigate and classify the actual problems of EMG signal analysis and to justify the accepted measures. There are still limitations in detecting and characterizing nonlinearities in the surface electromyography (sEMG, a special technique for studying muscle signals) signal, in estimating the phase, and in acquiring exact information because of deviation from normality. Traditional system reconstruction algorithms have various limitations and considerable computational complexity, and many show high variance.

Recent advances in signal processing and mathematical models have made it practical to develop advanced EMG detection and analysis techniques. Various mathematical techniques and Artificial Intelligence (AI) approaches have received extensive attention. Mathematical models include the wavelet transform, time-frequency approaches, the Fourier transform, the Wigner-Ville Distribution (WVD), statistical measures, and higher-order statistics. AI approaches to signal recognition include Artificial Neural Networks (ANN), dynamic recurrent neural networks (DRNN), and fuzzy logic systems. A Genetic Algorithm (GA) has also been applied in an evolvable hardware chip to map EMG inputs to desired hand actions. The wavelet transform is well suited to non-stationary signals like EMG. A time-frequency approach using the WVD in hardware could allow for a real-time instrument for specific motor unit training in biofeedback situations. Higher-order statistical (HOS) methods may be used for analyzing the EMG signal because of the unique properties of HOS applied to random time series. The bispectrum, or third-order spectrum, has the advantage of suppressing Gaussian noise.
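To make the idea of turning a noisy muscle signal into a discrete input more concrete, here is a minimal Python sketch. It is not one of the wavelet, WVD, or higher-order methods named above, and it is not Facebook’s method; it uses a much simpler time-domain approach (a moving root-mean-square envelope with a threshold). The function names, the window length, and the threshold value are all assumptions chosen for illustration.

```python
import math


def rms_envelope(samples, window):
    """Moving root-mean-square of a signal over the last `window` samples."""
    if window <= 0:
        raise ValueError("window must be positive")
    squares = [s * s for s in samples]
    envelope = []
    running = 0.0
    for i, sq in enumerate(squares):
        running += sq
        if i >= window:
            # Drop the sample that just left the window.
            running -= squares[i - window]
        count = min(i + 1, window)
        envelope.append(math.sqrt(max(running, 0.0) / count))
    return envelope


def detect_activation(samples, window=4, threshold=0.5):
    """Return the sample indices where the envelope rises above the threshold.

    Both `window` and `threshold` are illustrative values, not tuned ones.
    """
    onsets = []
    active = False
    for i, value in enumerate(rms_envelope(samples, window)):
        above = value > threshold
        if above and not active:
            onsets.append(i)
        active = above
    return onsets
```

A real sEMG pipeline would add filtering and a trained classifier on top of features like this one; the sketch only shows why a small, brief muscle contraction can be picked out of an otherwise quiet signal.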

One of the toughest problems of creating AR experiences—especially social ones—is mapping a common 3D graphical world to the real world so that everyone sees the same AR content. Niantic used such a map in its 2016 Pokémon Go AR game to let all the players see the same Pokémon in the same physical places during game play.

Facebook’s map is called LiveMaps, and it will serve as a sort of superstructure on which all its AR experiences will be built. A map is needed so that the glasses don’t have to work hard to orient themselves. “You really want to reconstruct the space around you and retain it in what we’re calling LiveMaps, because then your glasses don’t constantly have to reconstruct it, which is very power intensive,” Abrash says. He says the glasses can use the map as cached location data; all the device then has to do is look for changes and update the map with the new data. Abrash says LiveMaps will organize its data in three main layers: location, index, and content.
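The cache-and-update idea Abrash describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the map is modeled as a dictionary from hypothetical grid cells to labels, and `diff_and_update` is an invented name, not part of any Facebook API.

```python
_MISSING = object()  # sentinel so that a stored value of None still compares correctly


def diff_and_update(cached_map, observed):
    """Apply only the observations that differ from the cached map.

    cached_map and observed map a cell key, here a hypothetical (x, y, z)
    tuple, to whatever was seen there. Returns the list of cells that changed.
    """
    changed = [
        cell
        for cell, value in observed.items()
        if cached_map.get(cell, _MISSING) != value
    ]
    for cell in changed:
        cached_map[cell] = observed[cell]
    return changed
```

The point of the design is that unchanged cells cost only a comparison, which is far cheaper than reconstructing the whole space on every frame.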

The location layer is a shared coordinate system of all the locations in the world where AR objects might be placed, or where virtual meetings with avatars might occur. This data allows for the placement of “persistent” 3D graphical objects, meaning objects that stay anchored to specific places in the physical world. For example, Google Maps is putting persistent digital direction pointers near streets and landmarks (viewed through a phone camera) to help people find their way.

But LiveMaps goes much further than public spaces. It maps the private places where the AR glasses are worn, including the rooms in the home: anywhere virtual objects are placed or virtual hangouts with the avatars of friends are held.

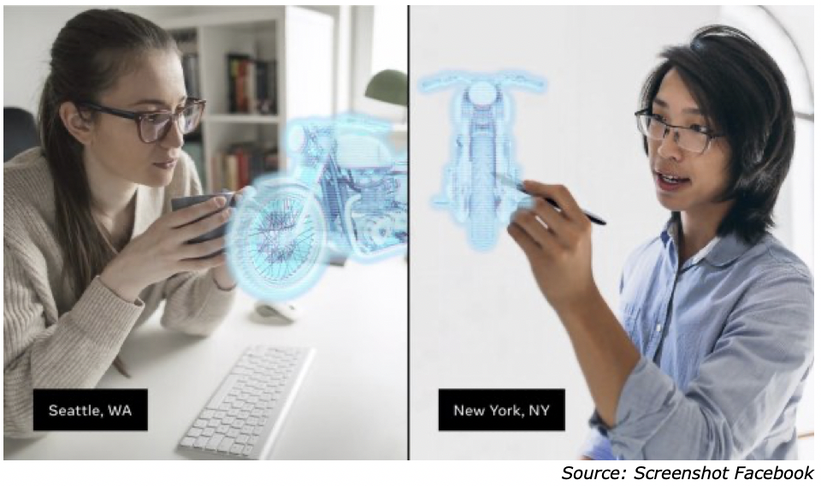

Figure 2: Working with Holographics

The index layer in LiveMaps captures the properties of the physical objects in a space, along with a lot of other metadata, including what an object is used for, how it interacts with other objects, what it’s made of, and how it moves. Knowing all this is critical for placing AR objects in ways that look natural and obey the laws of physics. “The same data allows you to put your friend’s avatar across the table from you in your apartment without having their (virtual) body cut in half by the (real) tabletop,” Abrash says. “The index layer is being updated constantly. For instance, if you were wearing your AR glasses when you came home, the index layer might capture where the cameras saw you put down your keys.”

The third layer, content, contains all the locations of the digital AR objects placed anywhere, public or private, in a user’s world. But, Abrash says, it’s really a lot more than that. This layer, he explains, stores the “relationships, histories, and predictions for the entities and events that matter personally to each of us, whether they’re anchored in the real world or not.” That means this layer could capture anything from a virtual painting on a wall, to a list of favorite restaurants, to the details of an upcoming business trip. This layer also links to knowledge graphs that define the concepts of “painting,” “restaurant,” or “business trip,” Abrash says. “In short, it’s the set of concepts and categories, and their properties and the relations between them, that model your life to whatever extent you desire, and it can at any time surface the information that’s personally and contextually relevant to you.” LiveMaps is really more like a map of your life. And it’s being updated constantly based on where you go and what you do.
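One way to picture the three layers is as three linked record types. The Python sketch below is purely illustrative: every class name, field, and method is an assumption made for this example, and nothing here reflects how Facebook actually stores LiveMaps data.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Anchor:
    """Location layer: a point in a shared coordinate system."""
    anchor_id: str
    position: tuple  # hypothetical (x, y, z) coordinates


@dataclass
class PhysicalObject:
    """Index layer: a real object and its properties at an anchor."""
    name: str
    anchor_id: str
    properties: dict = field(default_factory=dict)


@dataclass
class ContentItem:
    """Content layer: a virtual object or personal record, anchored or not."""
    title: str
    anchor_id: Optional[str] = None


@dataclass
class LayeredMap:
    location: dict = field(default_factory=dict)  # anchor_id -> Anchor
    index: list = field(default_factory=list)     # PhysicalObject records
    content: list = field(default_factory=list)   # ContentItem records

    def add_anchor(self, anchor):
        self.location[anchor.anchor_id] = anchor

    def content_at(self, anchor_id):
        """Titles of content pinned to one place, such as a virtual painting."""
        return [c.title for c in self.content if c.anchor_id == anchor_id]

    def unanchored(self):
        """Titles of content with no physical place, such as a trip plan."""
        return [c.title for c in self.content if c.anchor_id is None]
```

Keeping the anchor optional on content items mirrors Abrash’s remark that the content layer holds things “whether they’re anchored in the real world or not.”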

The content layer in LiveMaps is built using all the information that the cameras, sensors, and microphones on the AR glasses collect about the wearer, including habits and relationships. That’s a mountain of data to give a company like Facebook, which doesn’t have a strong track record of protecting people’s privacy. But with the AR glasses, Facebook could offer a new and different service built on all this data. It can all be fed into powerful AI models that can then make deep inferences about what information a person might need or what they might want to do in various contexts. This makes possible a personal digital assistant that knows you, and what you might want, far better than any assistant you have now. “It’s like . . . a friend sitting on your shoulder that can see your life from your own egocentric view and help you,” Abrash says. “[It] sees your life from your perspective, so all of a sudden it can know the things that you would know if you were trying to help yourself.”

Since AR glasses are hands-free, a digital assistant is mandatory; navigating through lots of menus, or typing in or speaking explicit instructions to the device, won’t be possible. The software will have to use what it knows about the wearer, the present moment, and the surrounding context to proactively display the information the wearer might need. Abrash gives the example of eye-tracking cameras detecting that your eyes have been resting on a certain kind of car for longer than a glance. Intuiting interest and intent, the glasses software might overlay information or graphics, such as data about its price or fuel economy. It might offer a short list of options representing educated guesses about the information wanted or the actions possible at that moment. The wearer would then make a selection with a glance picked up by the eye tracker or with a twitch of the finger picked up by an EMG bracelet. “EMG is really the ideal AR core input because it can be completely low friction,” Abrash says. “You have this thing on and all you have to do is wiggle a finger a millimeter.”
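The “longer than a glance” test in Abrash’s car example is essentially a dwell timer. The Python sketch below shows one simple way it could work; the function name, the sample format, and the 0.8-second default are assumptions for illustration, not details from Facebook.

```python
def dwell_targets(gaze_samples, dwell_seconds=0.8):
    """Return the targets the gaze rested on for at least dwell_seconds.

    gaze_samples is a time-ordered list of (timestamp_seconds, target) pairs,
    where target is an identifier or None when the gaze is on nothing known.
    Each uninterrupted rest on a target is reported at most once.
    """
    selected = []
    current = None   # target currently under the gaze
    start = None     # time the gaze arrived on that target
    reported = False
    for timestamp, target in gaze_samples:
        if target != current:
            current, start, reported = target, timestamp, False
        if current is not None and not reported and timestamp - start >= dwell_seconds:
            selected.append(current)
            reported = True
    return selected
```

Any target returned here could trigger the overlay or the short list of options described above, with the final choice confirmed by a glance or an EMG finger twitch.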

The assistant would also know a lot about the wearer’s habits, tastes, and choices, or even how to interact with people in certain social situations, which would further inform the details it proactively displays or the options it suggests. The assistant, Abrash says, might even ask you what you think of the questions it asks you or the choices it’s proposed. These questions, too, could be answered with a very quick input. “So you’re now in the loop where you can be training it with very low friction,” Abrash says. “And because the interaction is much more frequent, you and the assistant can both get better at working together, which really can happen.”

Abrash says that because the glasses see, record, and understand what you see and do all day long, they can provide far more, and far better, data to an AI assistant than a smartphone that spends most of its time in your pocket. He says this is one aspect of AR glasses that puts them in a whole different paradigm from the smartphone.

The wearer would have access to a wealth of data. Because the data collected in LiveMaps is stored on a Facebook cloud, 5G becomes mandatory. Facebook says the personal data it captures today helps it personalize a user’s experience on the social network. But the real reason it collects that data is so that it knows enough to fit people into narrowly defined audiences that advertisers can target. The sensors, microphones, and cameras on AR glasses might give Facebook a far more refined view of a wearer’s buying tendencies, and of the product or service needed, even before the wearer knows it.

Andrew Bosworth, vice president and head of Facebook Reality Labs, said the company has not started thinking about how it intends to use the highly detailed and personal data collected by AR glasses to target ads on Facebook.com or within the glasses.

Abrash has a lot to learn about building the LiveMaps structure that will store this wealth of data: first how to map and index the world, and then how to go about “building semantics” to start to understand the objects surrounding the wearer.

“To be able to build the capabilities for LiveMaps,” he adds, “[we need] to understand exactly what it is that we need to retain, how hard it is, what kinds of changes there are in the world . . . and how we can do the synchronization and keep things up to date.” That is part of the reason why 100 or so Facebook employees will soon be wearing augmented reality research glasses at work, at home, and in public in the San Francisco Bay Area and in Seattle. The glasses have no displays and, Facebook says, are not prototypes of a future product. The employees participating in “Project Aria” will use their test glasses to capture video and audio from the wearer’s point of view while collecting data from the sensors in the glasses that track where the wearer’s eyes are going.

Figure 3: Facebook Employee Using Project Aria

The third layer, content, contains all the locations of the digital AR objects placed anywhere—public or private—in a user’s world. But, Abrash says, it’s really a lot more than that. This layer, he explains, “stores the “relationships, histories, and predictions for the entities and events that matter personally to each of us, whether they’re anchored in the real world or not.” That means that this layer could capture anything from a virtual painting on a wall to a list of favorite restaurants, to the details of an upcoming business trip. This layer also links to knowledge graphs that define the concepts of “painting,” “restaurant,” or “business trip,” Abrash says. “In short, it’s the set of concepts and categories, and their properties and the relations between them, that model your life to whatever extent you desire, and it can at any time surface the information that’s personally and contextually relevant to you.” LiveMaps is really more like a map of your life. And it’s being updated constantly based on where you go and what you do.

The content layer in LiveMaps is built using all the information the cameras, sensors, and microphones on the AR glasses collect, habits, and relationships. That’s a mountain of data to give a company like Facebook, which doesn’t have a strong track record of protecting people’s privacy. But with the AR glasses, Facebook could offer a new and different service that’s built off all this data. It can all be fed into powerful AI models that can then make deep inferences about what information a person might need or things you might want to do in various contexts. This makes possible a personal digital assistant that knows you, and what you might want, far better than any assistant you have now. “It’s like . . . a friend sitting on your shoulder that can see your life from your own egocentric view and help you,” Abrash says. “[It] sees your life from your perspective, so all of a sudden it can know the things that you would know if you were trying to help yourself.”

Since AR glasses are hands-free, a digital assistance is mandatory; navigating through lots of menus, or typing in or speaking explicit instructions to the device, won’t be possible The software will have to use what it knows about the wearer, at the present and the with the proper context to proactively display the needed information you might need. Abrash gives the example of eye-tracking cameras detecting that your eyes have been resting on a certain kind of car for longer than a glance. Intuiting interest and intent, the glasses software might overlay information or graphics, such as data about its price or fuel economy. It might offer a short list of options representing educated guesses of information wanted or possible actions at that moment. The wearer would then make a selection with a glance of an eye through an eye tracker or with a twitch of the finger picked up by an EMG bracelet. “EMG is really the ideal AR core input because it can be completely low friction,” Abrash says. “You have this thing on and all you have to do is wiggle a finger a millimeter.”

The assistant would also know a lot about the wearer’s habits, tastes, and choices, or even how to interact with people in certain social situations, which would further inform the details it proactively displays or the options it suggests. The assistant, Abrash says, might even ask you what you think of the questions it asks you or the choices it’s proposed. These questions, too, could be answered with a very quick input. “So you’re now in the loop where you can be training it with very low friction,” Abrash says. “And because the interaction is much more frequent, you and the assistant can both get better at working together, which really can happen.”

Abrash says that because the glasses are seeing, recording, and understanding what you see and do all day long, they can provide far more, and far better, data to an AI assistant than a smartphone that spends most of its time in your pocket. He says this is one aspect of AR glasses that puts them in a whole different paradigm from the smartphone.

The wearer would have access to a wealth of data. The data collected in LiveMaps is stored on a Facebook cloud, so 5G becomes mandatory. Facebook says the personal data it captures today helps it personalize a user’s experience on the social network. But the real reason it collects that data is so that it knows enough to fit people into narrowly defined audiences that advertisers can target. The sensors, microphones, and cameras on AR glasses might give Facebook a far more refined look at the wearer’s buying tendencies, and at the product or service needed, even before the wearer knows it.

Andrew Bosworth, vice president and head of Facebook Reality Labs, said the company has not started thinking about how it intends to use the highly detailed and personal data collected by AR glasses to target ads, whether on Facebook.com or within the glasses.

Abrash has a lot to learn about building the LiveMaps structure that will store this wealth of data: first how to map the world, then how to index it, and finally “building semantics” to start to understand the objects surrounding the wearer.

“To be able to build the capabilities for LiveMaps,” he adds, “[we need] to understand exactly what it is that we need to retain, how hard it is, what kinds of changes there are in the world . . . and how we can do the synchronization and keep things up to date.” That is part of the reason why 100 or so Facebook employees will soon be wearing augmented reality research glasses at work, at home, and in public in the San Francisco Bay Area and in Seattle. The glasses have no displays and, Facebook says, are not prototypes of a future product. The employees participating in “Project Aria” will use their test glasses to capture video and audio from their own point of view while collecting data from the sensors in the glasses that track where the wearer’s eyes are going.

Figure 3: Facebook Employee Using Project Aria

“We’ve just got to get it out of the lab and get it into real-world conditions, in terms of [learning about] light, in terms of weather, and start seeing what that data looks like with the long-term goal of helping us inform [our product],” Bosworth says.

The data is also meant to help the engineers figure out how LiveMaps can enable the kind of AR experiences they want without requiring a ton of computing power in the AR device itself. Facebook is creating LiveMaps from scratch. There is currently no data to inform or personalize any AR experiences. Eventually the data that populates the three layers of LiveMaps will be crowdsourced from users, and there will be tight controls on which parts of that data are public, which are private to the user, and which parts the user can choose to share with others (like friends and family). But for now, it’s up to Facebook to start generating a base set of data to work with.

“Initially there has to be [professional] data capture to bootstrap something like this,” Abrash says. “Because there’s a chicken and egg problem.” In other words, Facebook must start the process by collecting enough mapping and indexing data to create the initial experiences for users. Without those there will be no users to begin contributing to the data that makes up LiveMaps. “Once people are wearing these glasses, crowdsourcing has to be the primary way that this works,” Abrash says. “There is no other way to scale.”

Abrash says the biggest hardware challenge is building a display system that enables 3D graphics while weighing very little and requiring only a small amount of power, with enough brightness and contrast to display graphics that can compete with the natural light coming in from the outside world. It must have a field of view sufficient to cover most or all of the user’s field of vision, something that’s not yet been seen in existing AR headsets or glasses.

The sheer number of components needed to make AR glasses function will be hard to squeeze into a design that you wouldn’t mind wearing around all day. This includes cameras to pinpoint your physical location, cameras to track the movement of your eyes to see what you’re looking at, displays large enough to overlay the full breadth of your field of view, processors to power the displays and the computer vision AI that identifies objects, and a small and efficient power supply. The processors involved can generate a lot of heat on the head, too, and right now there’s no cooling mechanism light and efficient enough to cool everything down.

Everyone who is working on a real pair of AR glasses is trying to find ways of overcoming these challenges. One way may be to offload some of the processing power and power supply to an external device, like a smartphone or some other small, wearable, dedicated device (like Magic Leap’s “puck”). Facebook’s eventual AR glasses, which could be five years away, may ultimately consist of three pieces: the glasses themselves, an external device, and a bracelet for EMG.

EMG, or electromyography, reads the electrical activity of muscles. As a whole, a cell has the same number of positive and negative charges. But in the resting state, the nerve cell membrane is polarized due to differences in the concentrations and ionic composition across the plasma membrane. A potential difference exists between the intra-cellular and extra-cellular fluids of the cell. In response to a stimulus from the neuron, a muscle fiber depolarizes as the signal propagates along its surface, and the fiber twitches. This depolarization, accompanied by a movement of ions, generates an electric field near each muscle fiber. An EMG signal is a train of motor unit action potentials (MUAPs) showing the muscle response to neural stimulation. The EMG signal appears random in nature and is generally modeled as a filtered impulse process, where the MUAP is the filter and the impulse process stands for the neuron pulses, often modeled as a Poisson process. The next figure shows the process of acquiring an EMG signal and decomposing it to recover the MUAPs.

Figure 4: EMG Components
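The filtered impulse model of EMG described above can be made concrete with a short simulation. The sketch below is illustrative only and rests on several assumptions that are not in the original text: the MUAP shape in `muap_template` is a made-up biphasic pulse, the Poisson firing is approximated by an independent coin flip per sample, and the firing rate, sampling rate, and number of motor units are arbitrary values.

```python
import math
import random
from typing import List


def poisson_impulse_train(rate_hz: float, duration_s: float,
                          fs_hz: int, rng: random.Random) -> List[float]:
    """Neuron pulses: 1.0 where the unit fires, else 0.0.
    A per-sample Bernoulli draw approximates a Poisson process
    when rate_hz is much smaller than fs_hz."""
    n = round(duration_s * fs_hz)
    p = rate_hz / fs_hz
    return [1.0 if rng.random() < p else 0.0 for _ in range(n)]


def muap_template(fs_hz: int, length_s: float = 0.01) -> List[float]:
    """Hypothetical MUAP shape: one sine cycle under a smooth window,
    giving a short positive-then-negative pulse."""
    n = round(length_s * fs_hz)
    return [math.sin(2 * math.pi * k / n) * math.sin(math.pi * k / n) ** 2
            for k in range(n)]


def convolve(x: List[float], h: List[float]) -> List[float]:
    """Full discrete convolution: the impulse train x filtered by h."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        if xi != 0.0:
            for j, hj in enumerate(h):
                y[i + j] += xi * hj
    return y


def simulate_emg(n_units: int = 5, rate_hz: float = 10.0,
                 duration_s: float = 1.0, fs_hz: int = 1000,
                 seed: int = 0) -> List[float]:
    """Sum the MUAP trains of several independent motor units."""
    rng = random.Random(seed)
    h = muap_template(fs_hz)
    total = [0.0] * (round(duration_s * fs_hz) + len(h) - 1)
    for _ in range(n_units):
        train = poisson_impulse_train(rate_hz, duration_s, fs_hz, rng)
        for i, v in enumerate(convolve(train, h)):
            total[i] += v
    return total


signal = simulate_emg()
```

Each motor unit contributes its own train of identical pulses at random times, and the summed trains overlap into the noise-like trace that a surface electrode would record. Decomposition, the step the figure refers to, is the reverse problem of pulling the individual trains back out, which this sketch does not attempt.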

That technology is being developed by CTRL-labs, which Facebook acquired in 2019. CTRL-labs researchers have been testing the possibility of using a bracelet device to intercept signals from the brain sent down through the motor nerves in the wrist to control the movements of the fingers. In theory, a person wearing such a bracelet could be taught to control aspects of the AR glasses’ user interface with certain finger movements. But the actual movements of the muscles in the fingers would be secondary: The bracelet would capture the electrical signals being sent from the brain before they even reach the fingers, and then translate those signals into inputs that the software could understand. “EMG can be made highly reliable, like a mouse click or key press,” Abrash said during his speech at the Connect conference, which was held virtually this year. “EMG will provide just one or two bits of what I’ll call neural-click, the equivalent of tapping on a button or pressing and then releasing it, but it will quickly progress to richer controls.”
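To give a feel for what a one-bit "neural click" could look like in software, the sketch below turns a raw signal into press and release events using a smoothed envelope and two thresholds. It is a generic signal-processing illustration, not a description of how CTRL-labs decodes wrist signals: the function name `detect_clicks`, the window length, and both threshold values are assumptions chosen for the example.

```python
from typing import List, Tuple


def detect_clicks(signal: List[float],
                  press_thresh: float = 0.5,     # assumed envelope level for "press"
                  release_thresh: float = 0.25,  # assumed lower level for "release"
                  window: int = 5) -> List[Tuple[int, str]]:
    """Emit (sample_index, "press" | "release") events.

    The envelope is a moving average of the rectified signal. Using a
    lower threshold for release than for press (hysteresis) keeps a
    noisy signal from toggling the state on every sample."""
    events: List[Tuple[int, str]] = []
    pressed = False
    recent: List[float] = []
    for i, x in enumerate(signal):
        recent.append(abs(x))          # rectify
        if len(recent) > window:
            recent.pop(0)
        envelope = sum(recent) / len(recent)
        if not pressed and envelope >= press_thresh:
            pressed = True
            events.append((i, "press"))
        elif pressed and envelope <= release_thresh:
            pressed = False
            events.append((i, "release"))
    return events


# Example: silence, a short burst of activity, then silence again.
burst = [0.0] * 10 + [1.0, -1.0] * 5 + [0.0] * 10
events = detect_clicks(burst)
```

The burst produces exactly one press followed by one release, which matches the "pressing and then releasing" behavior in the quote. The richer controls Abrash anticipates would need far more than a threshold.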

Abrash says the brain’s signals are stronger and easier to read at the wrist, and far less ambiguous than when read using sensors on the head. As the technology advances, the bracelet may be able to capture just the intent of the user to move their finger, and actual physical movement would be unnecessary.

The technology isn’t exactly reading the user’s thoughts; it’s analyzing the electrical signals from the brain that are generated by their thoughts. But some may not make that distinction. When Abrash talked about EMG during his Connect keynote, several people watching the livestream noted in the comments section: “Facebook is reading my mind!”

|

Contact Us

|

Barry Young

|